AI Digest: AGI is coming, tutorial by Andrej Karpathy and free content on DeAI

Insights on the Path to AGI, Deep Learning Tutorial, and DeAI Updates

In this edition of EPIC AI NEWSLETTER, we've compiled key materials released over the past week. Discover the latest advancements in AGI, a tutorial by Andrej Karpathy, and free resources on DeAI.

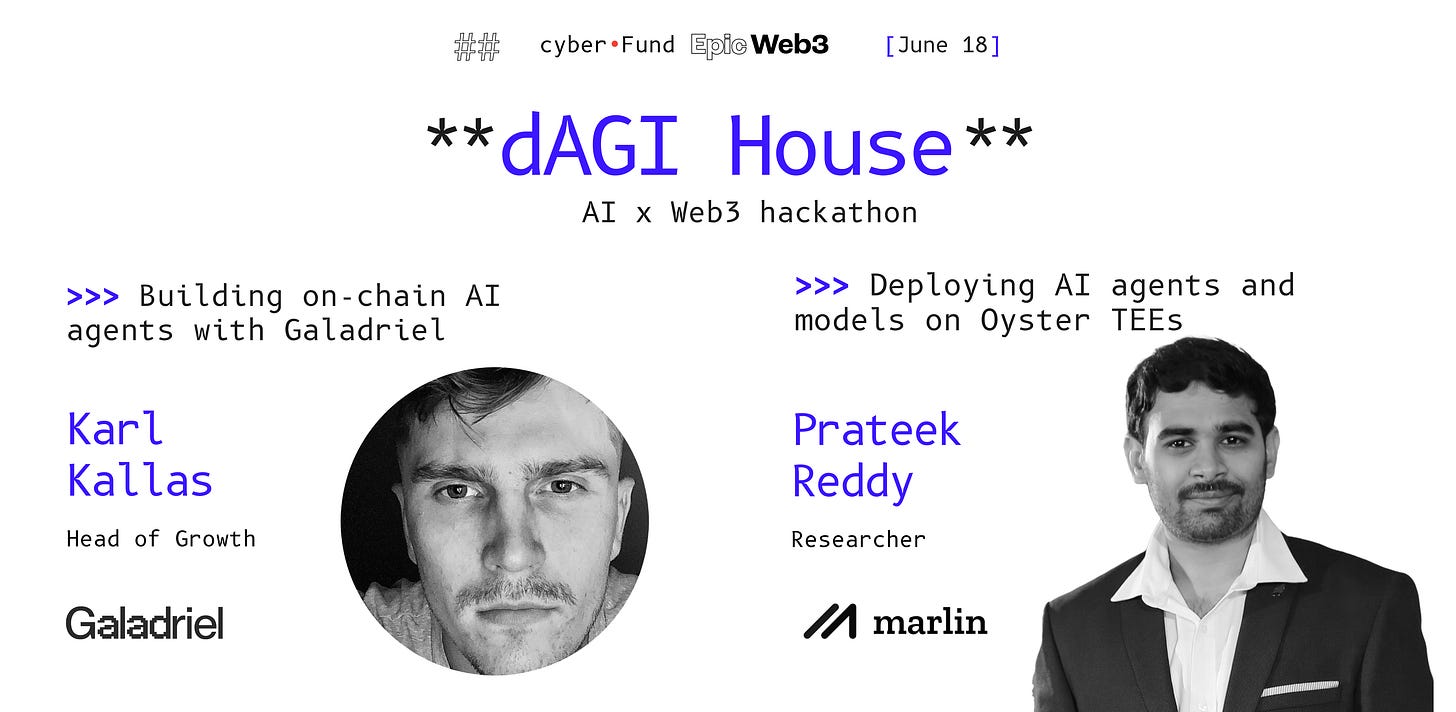

👨🏻‍💻 dAGI House AI x Web3 Hackathon powered by cyber•Fund

Two exciting days full of interesting talks and workshops have already taken place at our AIxWeb3 hackathon. Speakers from companies such as Seed Club Ventures, Pond, Flashbots, NVIDIA, Super Protocol and Gnosis discussed a variety of topics, including:

– Hacking DeAI session

– Real-time AI processing workshops

– Building AI agents with Pond's GNN model

– Using AI Agents for prediction markets

– How Small Language Models help create a Human-Driven Data Economy

And more!

You can watch the recordings for free on our YouTube channel.

Tomorrow, we have a day of workshops lined up:

– "Building on-chain AI agents with Galadriel" by Karl Kallas, Head of Growth at Galadriel

– "Deploying AI agents and models on Oyster TEEs" by Prateek Reddy, Researcher at Marlin

Participation is free; just register to get the link. All session recordings will also be available on our YouTube channel.

In a few weeks, the main hackathon event will begin. We'll be building autonomous agents, interfaces for on-chain interactions, natural language or voice-enabled intents for wallet tasks, and using AI to improve security and automate auditing. Join us just before EthCC to bring your ideas to life, compete for a share of the $100k prize pool, and launch your product.

We've opened up a travel scholarship of up to $1,000 for the devs with the most impressive portfolios.

🚨 AGI is coming: Ex-OpenAI researcher's vision for the next decade

Leopold Aschenbrenner, a researcher fired from OpenAI for an information leak, has published a 165-page essay on what to expect from AI in the next decade.

It covers everything from scaling laws and model development predictions to alignment issues and the behavior of leading labs as they approach AGI.

The possibility that AGI could be realized by 2027 is based on rapid improvements in computational power and algorithmic efficiency. The paper highlights the economic and industrial mobilization required, the strategic importance of securing AI against state actors, the challenges of controlling superintelligent systems, and the potential impact on society.

Key Points:

Exponential Growth in Compute Power:

"GPT-2 to GPT-4 took us from ~preschooler to ~smart high-schooler abilities in 4 years. Tracing trendlines in compute (~0.5 orders of magnitude or OOMs/year), algorithmic efficiencies (~0.5 OOMs/year), and 'unhobbling' gains (from chatbot to agent), we should expect another preschooler-to-high-schooler-sized qualitative jump by 2027"

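As a rough illustration of the arithmetic behind the quote above, here is a minimal sketch. It assumes the two quoted rates simply add in log space and that the span runs from 2023 to 2027; both are our simplifications for illustration, not figures worked out in the newsletter. The "unhobbling" gains are left out because the quote gives no number for them.

```python
# Back-of-the-envelope arithmetic for the quoted trendlines.
# Assumptions (ours): the rates add in log space, and "unhobbling"
# gains are excluded because the quote does not quantify them.

def effective_compute_ooms(years, compute_ooms_per_year=0.5, algo_ooms_per_year=0.5):
    """Orders of magnitude (OOMs) of effective compute gained over `years`."""
    return years * (compute_ooms_per_year + algo_ooms_per_year)


def ooms_to_multiplier(ooms):
    """Convert orders of magnitude into a plain multiplier (1 OOM = 10x)."""
    return 10 ** ooms


if __name__ == "__main__":
    years = 2027 - 2023  # assumed span, for illustration only
    ooms = effective_compute_ooms(years)
    print(f"{ooms:.1f} OOMs -> ~{ooms_to_multiplier(ooms):,.0f}x effective compute")
```

Under these assumptions, four years at a combined 1 OOM per year works out to about 4 OOMs, or roughly a 10,000x increase in effective compute.
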
Industrial Mobilization and Investment:

"Achieving AGI will likely require a substantial economic and industrial mobilization. The document suggests that by the end of the decade, investments in AI could reach up to $1 trillion annually, driving the development of large-scale GPU clusters and datacenters necessary for training advanced AI models."

Security and Geopolitical Implications:

The author highlights the importance of securing AI research against espionage and adversarial state actors. "As AGI approaches, the national security implications become paramount, with calls for tighter security measures to protect AI advancements from being exploited by rival nations".

Potential for Recursive Self-Improvement:

"Once AGI is achieved, these systems could automate AI research, leading to an 'intelligence explosion' where AI capabilities grow exponentially. This could compress decades of algorithmic progress into a few years, rapidly transitioning from human-level intelligence to superintelligence."

Leopold also recently appeared as a guest on the Dwarkesh podcast, which we've mentioned before. The 4-hour interview covers topics such as:

– The race to build a $1 trillion power cluster

– Predictions for 2028

– Recent developments at OpenAI

– Chinese espionage in AGI labs

🛠 Andrej Karpathy has published a tutorial on how to create an LLM from scratch

In a 4-hour video, he builds a GPT-2 (124M) model from scratch, explaining each step along the way. The implementation is in Python and covers only pre-training; he plans to release further tutorials on LLM development, including one on fine-tuning.

“The video ended up so long because it is... comprehensive: we start with an empty file and end up with a GPT-2 (124M) model:

– First, we build the GPT-2 network

– Then we optimize it to train very fast

– Then we set up the training run optimization and hyperparameters by referencing GPT-2 and GPT-3 papers

– Then we bring up model evaluation, and

– Then cross our fingers and go to sleep.

In the morning we look through the results and enjoy amusing model generations. Our "overnight" run even gets very close to the GPT-3 (124M) model. This video builds on the Zero To Hero series and at times references previous videos. You could also see this video as building my nanoGPT repo, which by the end is about 90% similar.” – X
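To give a sense of where the "124M" figure comes from, here is a small parameter-counting sketch. It is not taken from the video. The hyperparameters (12 layers, 768-dimensional embeddings, a 50,257-token vocabulary, 1,024 positions) are the publicly documented GPT-2 small configuration rather than anything stated in this newsletter, and the sketch assumes the output head shares weights with the token embedding.

```python
# Rough parameter count for a GPT-2 small style transformer.
# Assumptions (ours): standard GPT-2 small hyperparameters, biases on all
# linear layers, and an output head tied to the token embedding.

def gpt2_param_count(n_layer=12, n_embd=768, vocab_size=50257, block_size=1024):
    token_emb = vocab_size * n_embd   # token embedding table
    pos_emb = block_size * n_embd     # learned position embeddings
    layer_norm = 2 * n_embd           # scale + shift

    # Attention: fused QKV projection plus output projection.
    attn = (n_embd * 3 * n_embd + 3 * n_embd) + (n_embd * n_embd + n_embd)
    # MLP: expand to 4x width, then project back.
    mlp = (n_embd * 4 * n_embd + 4 * n_embd) + (4 * n_embd * n_embd + n_embd)

    per_block = 2 * layer_norm + attn + mlp
    # One final layer norm after the last block.
    return token_emb + pos_emb + n_layer * per_block + layer_norm


if __name__ == "__main__":
    total = gpt2_param_count()
    print(f"{total:,} parameters (~{total / 1e6:.0f}M)")
```

With these defaults the count comes to 124,439,808, which is the model size usually rounded to 124M.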

That wraps it up for today! 👋 But before you go...

Check out our LinkedIn, Facebook or Twitter pages for more details. Follow us to stay updated on all the latest news!

Best,

Epic AI Dev team.