AI Digest: Open-Source LLM Platforms, New Retrieval Techniques, TOKEN2049 & More

This week in the Epic AI Newsletter, we have an exciting mix of developments: Microsoft’s new AI tool for correcting hallucinations, updates to Google’s Gemini models, key insights from TOKEN2049 on AI and Web3, the launch of Meta’s lightweight Llama models for edge devices, and a powerful open-source platform for evaluating LLM applications.

🧞‍♂️ dAGI hack: Web3 x AI online hackathon

Calling all AI x Web3 builders! Ready to hack the future and win $50K?

From November 7 to December 16, join 500+ builders in 5 weeks of innovation.

Win bounties, connect with top mentors, and tackle challenges in decentralized governance, AI-driven content moderation, innovative Web3 gaming, and more.

Participate online from anywhere!

Google Introduces Updated Gemini 1.5 Models

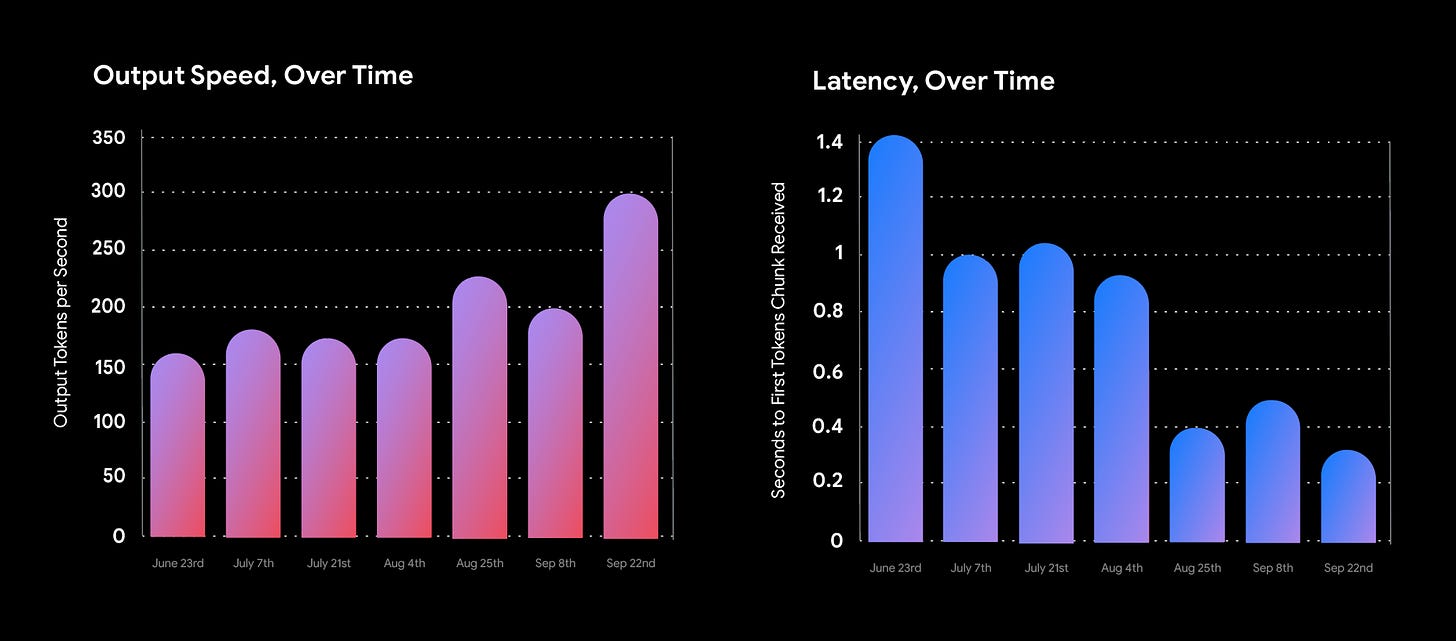

Google has rolled out two major updates to its Gemini models—Gemini-1.5-Pro-002 and Gemini-1.5-Flash-002. These models deliver significant improvements across various tasks, such as text processing, code generation, and vision.

What’s New in Gemini 1.5:

50% reduced price on Gemini 1.5 Pro, for both input and output, on prompts under 128K tokens.

2x higher rate limits on Flash models and 3x higher rate limits on Pro models.

Faster responses: up to 2x faster output and 3x lower latency.

Improved accuracy in handling math, long-context tasks, and visual understanding.

Shorter default outputs (~5-20% shorter), in response to developer feedback asking for more concise, efficient responses.

Improved benchmarks: A ~20% boost in math tasks and ~7% improvement in multi-modal tasks.

Key Enhancements:

Better long-context understanding: Gemini 1.5 can now handle 1000-page PDFs and complex code repositories with over 10 thousand lines of code.

Increased accuracy in visual tasks: The model performs better in vision tasks, with ~2-7% improvements in image and video processing.

Helpfulness alongside safety: The new models produce fewer unnecessary refusals and unhelpful responses while still upholding safety standards in real-world use cases.

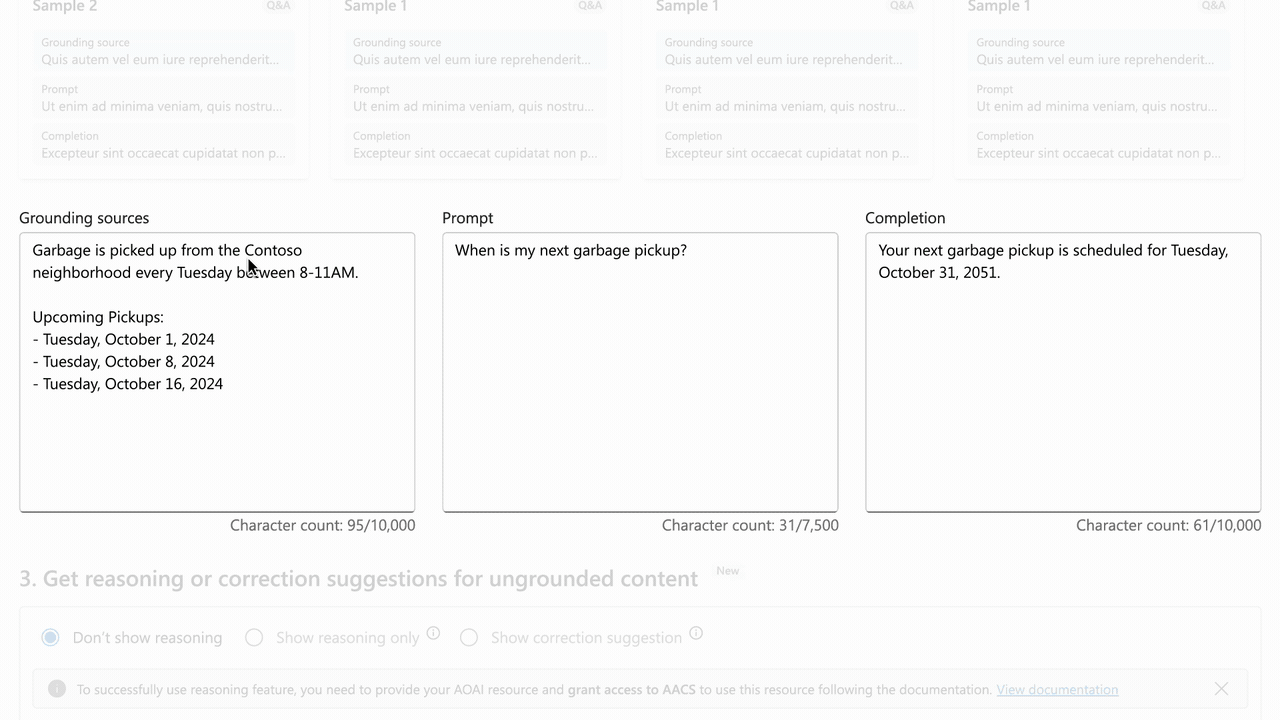

Microsoft Unveils AI Tool to Correct Hallucinations in Real-Time

Microsoft has introduced a feature to address the growing concern of AI hallucinations. The new tool, called Correction, is designed to detect and correct hallucinated content in real time, helping improve the overall accuracy of AI-generated content.

Key Features of Microsoft’s Correction Tool:

Real-time correction of hallucinations as they occur

Available through the groundedness detection feature of the Azure AI Content Safety API

Focused on enhancing groundedness by referencing external documents

How it Works:

The AI application connects to grounding documents, as is common in document summarization and RAG-based Q&A scenarios.

When an ungrounded sentence is detected, a request is sent to the generative AI model for a corrected version.

The LLM compares the ungrounded sentence with the grounding document. If the sentence has no content related to the document, it may be filtered out.

If the sentence only partly aligns with the source, the model rewrites it to match the grounding document, improving accuracy and reliability.
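The detect, then rewrite-or-filter flow described above can be sketched in a few lines of Python. This is a toy illustration only, not Microsoft's implementation or the Azure API: the token-overlap score, the two thresholds, and every function name here are assumptions made for the example, and the `rewrite` callback stands in for the call a real system would make to a generative model.

```python
import re

def sentence_split(text: str) -> list[str]:
    """Naive splitter: break on whitespace that follows ., ! or ?"""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]

def tokens(text: str) -> set[str]:
    """Lowercased alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def support(sentence: str, grounding: str) -> float:
    """Fraction of the sentence's tokens that also appear in the grounding text."""
    sent = tokens(sentence)
    if not sent:
        return 0.0
    return len(sent & tokens(grounding)) / len(sent)

def closest_source_sentence(sentence: str, grounding: str) -> str:
    """Stand-in for the LLM rewrite: return the best-matching source sentence."""
    return max(sentence_split(grounding),
               key=lambda g: len(tokens(sentence) & tokens(g)))

def correct(answer: str, grounding: str, rewrite=closest_source_sentence,
            grounded_at: float = 0.9, related_at: float = 0.3) -> str:
    """Keep grounded sentences, rewrite partly grounded ones, drop the rest."""
    kept = []
    for sentence in sentence_split(answer):
        score = support(sentence, grounding)
        if score >= grounded_at:
            kept.append(sentence)                      # grounded: keep as-is
        elif score >= related_at:
            kept.append(rewrite(sentence, grounding))  # partly grounded: rewrite
        # otherwise the sentence is unrelated to the source and is filtered out
    return " ".join(kept)

grounding = "The report was published in 2023. Revenue grew by 12 percent."
answer = ("The report was published in 2023. Revenue grew by 40 percent. "
          "The CEO loves sailing.")
print(correct(answer, grounding))
```

In this example the first sentence is kept, the second is replaced with the matching source sentence, and the third is dropped. A production system would use a trained groundedness detector rather than token overlap.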

TOKEN2049 Keynote: The Future of AI is Collaborative Agents

At TOKEN2049 in Singapore, AI x Crypto was highlighted as one of the most exciting verticals to watch. A particularly compelling keynote, titled "The Future of AI is Collaborative Agents," by Ron Bodkin, Co-Founder and CEO of Theoriq, showcased the potential of AI agents to revolutionize industries.

Takeaways:

Vision for Collaborative AI Agents: In the future, multiple AI agents will work together to perform tasks more efficiently, creating a powerful network of specialized agents.

Web3 Principles in AI Development: By incorporating Web3 values like decentralization, community ownership, and open innovation, AI can be developed in a way that benefits everyone, not just large corporations.

Collaborative Agent Ecosystem: Theoriq is building an open, permissionless ecosystem where developers and users can create and teach AI agents to collaborate. This allows for a more inclusive AI development process.

Real-time Data Access & API Interactions: These agents can interact with real-time data, execute code, and work with APIs, making them useful for everything from blockchain analysis to automated trading and data-driven decision-making.

Collectives of Specialized Agents: Like teams in a company, specialized AI agents will collaborate to solve complex problems, enhancing both accuracy and efficiency.

Role of Web3: Web3 technologies, such as smart contracts and token incentives, will be integral in coordinating the work of these agents, ensuring transparency, fairness, and accountability.
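To make the idea of a collective of specialized agents more concrete, here is a minimal Python sketch. It is not Theoriq's protocol or API: the `Agent` and `Collective` classes, the skill names, and the routing rule (first agent that advertises the skill) are all hypothetical, and the agents are plain functions rather than real models.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Agent:
    """A hypothetical specialized agent: a name, its skills, and a handler."""
    name: str
    skills: frozenset[str]
    run: Callable[[str], str]

class Collective:
    """Routes each step of a plan to the first agent offering the needed skill."""

    def __init__(self, agents: list[Agent]):
        self.agents = list(agents)

    def route(self, skill: str) -> Agent:
        for agent in self.agents:
            if skill in agent.skills:
                return agent
        raise LookupError(f"no agent offers skill {skill!r}")

    def solve(self, plan: list[tuple[str, str]]) -> list[str]:
        """Run every (skill, task) step and record which agent handled it."""
        results = []
        for skill, task in plan:
            agent = self.route(skill)
            results.append(f"{agent.name}: {agent.run(task)}")
        return results

# Two toy agents; a real agent would call an API, run code, or query a model.
fetcher = Agent("fetcher", frozenset({"fetch"}), lambda t: f"fetched {t}")
analyst = Agent("analyst", frozenset({"analyze"}), lambda t: f"analyzed {t}")

team = Collective([fetcher, analyst])
for line in team.solve([("fetch", "block 100"), ("analyze", "block 100")]):
    print(line)
```

The sketch leaves out everything Web3 would add in the keynote's vision, such as smart-contract coordination and token incentives.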

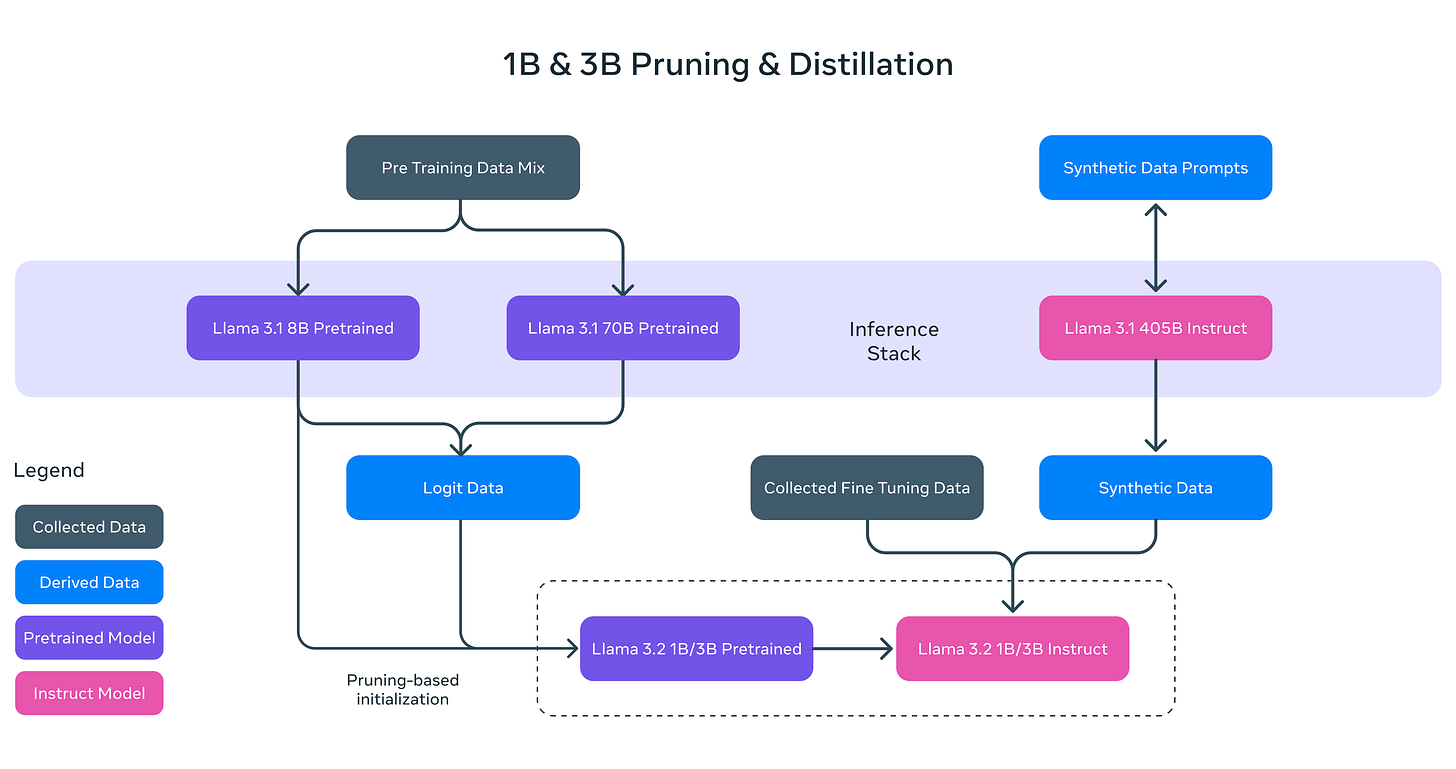

Meta Announces Llama 3.2 Models: Optimized for Edge Devices and Vision

Meta’s AI team has officially launched Llama 3.2, an upgraded version of its LLMs with significant improvements for edge devices, vision tasks, and agentic applications.

What’s New in Llama 3.2:

Lightweight models: Llama 3.2 introduces 1B and 3B models optimized for edge devices, including support for Qualcomm, MediaTek, and Arm processors.

Vision LLMs: The 11B and 90B models offer state-of-the-art performance in vision tasks, outperforming some closed models such as Claude 3 Haiku.

Multimodal safety: New Llama Guard models cover multimodal (image and text) use cases, with a smaller variant aimed at edge deployments.

Features:

Edge deployment: Lightweight models fit onto mobile devices, enabling on-device processing for tasks like summarization, instruction following, and content rewriting.

Llama Stack: Meta also introduced the first official distribution of the Llama Stack, simplifying how developers and enterprises build agentic applications.

Ecosystem support: Partners like AWS, Google Cloud, Dell Technologies, and Intel are working to integrate Llama 3.2 models into their platforms for easier deployment.
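A quick back-of-the-envelope calculation shows why the 1B and 3B models are plausible candidates for phones. The sketch below assumes the nominal parameter counts implied by the model names and counts weights only. The precision levels (16, 8, and 4 bits per weight) are illustrative assumptions rather than figures from Meta's announcement, and real memory use is higher once activations and the KV cache are included.

```python
def weight_memory_gb(params_billions: float, bits_per_weight: int) -> float:
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9

# Nominal sizes from the model names; precisions are illustrative assumptions.
for size in (1, 3):
    for bits in (16, 8, 4):
        gb = weight_memory_gb(size, bits)
        print(f"{size}B parameters at {bits}-bit: ~{gb:.1f} GB")
```

Under these assumptions, a 3B model needs roughly 6 GB for weights at 16-bit precision but about 1.5 GB at 4-bit, which is why quantization matters so much for on-device use.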

Opik: Open-Source Platform for Testing LLM Applications

Opik, created by Comet, is an open-source platform for AI developers that helps evaluate, test, and monitor large language model applications.

Key Features of Opik:

Hallucination Detection: Track and correct inaccuracies in LLM outputs.

RAG Evaluation: Easily evaluate Retrieval-Augmented Generation applications.

Context Recall: Measure how well models retain context over long interactions.

Answer Relevance: Ensure your LLM delivers answers that are directly related to user queries.

Test Case Management: Store and run test cases to ensure consistent performance.

Development and CI/CD Integration:

LLM Tracing: Track every interaction with your LLM in both development and production environments.

Feedback Annotation: Annotate LLM responses using Opik’s Python SDK or UI to log user feedback.

Automated Evaluation: Store datasets, run experiments, and automate the testing of LLM applications.

CI/CD Integration: Seamlessly integrate with your CI/CD pipeline using pytest.
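As a rough picture of what a CI check on LLM output can look like, here is a pytest-style test in plain Python. It deliberately does not use Opik's SDK: the keyword-overlap `answer_relevance` function is a toy stand-in for a real metric, and `TEST_CASES`, `fake_llm_app`, and the 0.7 threshold are hypothetical names and values chosen for the example.

```python
import re

STOPWORDS = {"a", "an", "the", "is", "are", "do", "does", "in", "of",
             "for", "to", "what", "which", "how"}

def answer_relevance(question: str, answer: str) -> float:
    """Toy metric: share of the question's keywords that the answer mentions."""
    q = set(re.findall(r"[a-z0-9]+", question.lower())) - STOPWORDS
    a = set(re.findall(r"[a-z0-9]+", answer.lower()))
    return len(q & a) / len(q) if q else 0.0

# Hypothetical stored test cases: question -> canned application answer.
TEST_CASES = {
    "Which sizes do the lightweight Llama 3.2 models come in?":
        "The lightweight Llama 3.2 models come in 1B and 3B sizes.",
    "What is Opik used for?":
        "Opik is used to evaluate, test, and monitor LLM applications.",
}

def fake_llm_app(question: str) -> str:
    """Stand-in for the application under test; a real test would call the LLM."""
    return TEST_CASES[question]

def test_answers_stay_relevant():
    """Fails the build if any stored question gets an off-topic answer."""
    for question in TEST_CASES:
        score = answer_relevance(question, fake_llm_app(question))
        assert score >= 0.7, f"low relevance ({score:.2f}) for: {question}"
```

Saved in a file whose name starts with `test_`, this would run under `pytest` in a CI job. Swapping the toy metric for a proper evaluation metric keeps the same test structure.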

Opik offers a complete toolkit for developers looking to improve their LLMs through rigorous testing, evaluation, and monitoring.

That wraps it up for today! 👋 But before you go...

Check out our LinkedIn, Facebook or Twitter pages for more details. Follow us to stay updated on all the latest news!

Best,

Epic AI team.